Amazon introduced AWS Lambda in 2014 as an event-driven, serverless computing platform. Developers could write a function in one of the supported programming languages, upload it to AWS, and have it invoked on the configured events. In late 2018, custom runtime support was added, which allowed developers to use any language of their choice. Until late 2020, zip archives of application code or binaries were the only supported deployment option. If your application had dependencies or reusable components, they could be added as Lambda layers, but everything still had to be packaged as zip files.

In December 2020, AWS announced that Lambda supports packaging serverless functions as container images. This means that you can deploy a custom Docker or OCI image as an AWS Lambda function as long as it implements the Lambda Runtime API. AWS provides base images for all the supported Lambda runtimes (Python, Node.js, Java, .NET, Go, Ruby) so that you can easily add your code and dependencies. Lambda supports container images up to 10 GB in size.
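To give a feel for what the Lambda Runtime API involves, here is a minimal sketch of one iteration of the polling loop that a runtime performs. The endpoint paths, the `AWS_LAMBDA_RUNTIME_API` environment variable and the `Lambda-Runtime-Aws-Request-Id` header belong to the Runtime API; the names `nextURL`, `responseURL`, `processNext` and the echo handler are hypothetical and exist only for illustration. Error reporting is omitted.

```go
package main

import (
	"bytes"
	"fmt"
	"io"
	"net/http"
	"os"
)

// nextURL is the endpoint a runtime long-polls for the next event.
func nextURL(base string) string {
	return base + "/2018-06-01/runtime/invocation/next"
}

// responseURL is the endpoint that receives the result for one request ID.
func responseURL(base, requestID string) string {
	return fmt.Sprintf("%s/2018-06-01/runtime/invocation/%s/response", base, requestID)
}

// processNext handles a single invocation: fetch the event, run the handler,
// and post the result back. base is "http://" + $AWS_LAMBDA_RUNTIME_API.
func processNext(client *http.Client, base string, handle func([]byte) ([]byte, error)) error {
	resp, err := client.Get(nextURL(base))
	if err != nil {
		return err
	}
	defer resp.Body.Close()
	event, err := io.ReadAll(resp.Body)
	if err != nil {
		return err
	}
	requestID := resp.Header.Get("Lambda-Runtime-Aws-Request-Id")

	result, err := handle(event)
	if err != nil {
		return err
	}

	post, err := client.Post(responseURL(base, requestID), "application/json", bytes.NewReader(result))
	if err != nil {
		return err
	}
	return post.Body.Close()
}

func main() {
	api := os.Getenv("AWS_LAMBDA_RUNTIME_API")
	if api == "" {
		fmt.Println("AWS_LAMBDA_RUNTIME_API is not set; not running inside Lambda")
		return
	}
	// Hypothetical handler that returns the event unchanged.
	echo := func(event []byte) ([]byte, error) { return event, nil }
	for {
		if err := processNext(http.DefaultClient, "http://"+api, echo); err != nil {
			fmt.Fprintln(os.Stderr, err)
			os.Exit(1)
		}
	}
}
```

You rarely write this loop yourself: the AWS base images and the `lambda.Start` call from aws-lambda-go take care of it.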

Media Transcoder Lambda

Let’s build a simple media transcoder function that utilizes the FFmpeg library and runs in a container.

We will build a Docker image based on the AWS Lambda base image for Go.

We will include a static build of FFmpeg, and then include a handler function written in Go that invokes FFmpeg to transcode media files to WebM format.

To keep this simple, we will configure the function to be called when media files are uploaded to an S3 bucket. The PUT event triggers the Lambda function, which creates a WebM file and copies it to the same bucket.
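For orientation, the sketch below decodes a trimmed S3 notification into a small struct that mirrors only the fields this walkthrough relies on. The sample payload, the bucket name and the `s3Event` and `parse` names are made up for illustration; in a real handler, the `events.S3Event` type from aws-lambda-go plays this role.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// s3Event mirrors only the notification fields used in this article.
type s3Event struct {
	Records []struct {
		EventName string `json:"eventName"`
		AWSRegion string `json:"awsRegion"`
		S3        struct {
			Bucket struct {
				Name string `json:"name"`
			} `json:"bucket"`
			Object struct {
				Key string `json:"key"`
			} `json:"object"`
		} `json:"s3"`
	} `json:"Records"`
}

// sample is a trimmed, made-up notification.
const sample = `{
  "Records": [{
    "eventName": "ObjectCreated:Put",
    "awsRegion": "us-east-1",
    "s3": {
      "bucket": {"name": "example-media-bucket"},
      "object": {"key": "uploads/clip.mp4"}
    }
  }]
}`

// parse decodes a raw notification payload.
func parse(payload []byte) (s3Event, error) {
	var ev s3Event
	err := json.Unmarshal(payload, &ev)
	return ev, err
}

func main() {
	ev, err := parse([]byte(sample))
	if err != nil {
		panic(err)
	}
	r := ev.Records[0]
	fmt.Printf("%s: s3://%s/%s (%s)\n", r.EventName, r.S3.Bucket.Name, r.S3.Object.Key, r.AWSRegion)
}
```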

Let’s use a multi-stage Docker build that starts off with downloading the static FFmpeg build.

    FROM public.ecr.aws/a1p3q7r0/alpine:3.12.0 as ffmpeg-builder

    RUN cd /usr/local/bin && \
        mkdir ffmpeg && \
        cd ffmpeg/ && \
        wget https://johnvansickle.com/ffmpeg/builds/ffmpeg-git-amd64-static.tar.xz && \
        tar xvf *.tar.xz && \
        rm -f *.tar.xz && \
        mv ffmpeg-git-*-amd64-static/ffmpeg .

We will create a handler in Go that responds to S3 events. Validations are skipped to keep this simple.

    package main

    import (
        "context"
        "fmt"
        "log"
        "os"
        "os/exec"
        "path/filepath"
        "strings"

        "github.com/aws/aws-lambda-go/events"
        "github.com/aws/aws-lambda-go/lambda"
        "github.com/aws/aws-sdk-go/aws"
        "github.com/aws/aws-sdk-go/aws/session"
        "github.com/aws/aws-sdk-go/service/s3"
        "github.com/aws/aws-sdk-go/service/s3/s3manager"
    )

    func main() {
        lambda.Start(handler)
    }

    // handler transcodes the first object in the S3 event to WebM and uploads the result.
    func handler(_ context.Context, s3Event events.S3Event) error {
        record := s3Event.Records[0]
        bucket := record.S3.Bucket.Name
        key := record.S3.Object.Key

        sess, err := session.NewSession(&aws.Config{Region: &record.AWSRegion})
        if err != nil {
            return err
        }

        // /tmp is the writable directory of the Lambda container.
        file, err := os.Create(fmt.Sprintf("/tmp/%s", key))
        if err != nil {
            return err
        }
        defer file.Close()

        _, err = s3manager.NewDownloader(sess).Download(file, &s3.GetObjectInput{
            Bucket: &bucket,
            Key:    &key,
        })
        if err != nil {
            return err
        }
        log.Printf("Downloaded %s", file.Name())

        // Swap the extension for .webm; ffmpeg picks the output format from it.
        outputFile := strings.TrimSuffix(file.Name(), filepath.Ext(file.Name())) + ".webm"
        out, err := exec.Command("ffmpeg", "-i", file.Name(), outputFile).CombinedOutput()
        log.Printf("Execution output:\n%s\n", string(out))
        if err != nil {
            return fmt.Errorf("ffmpeg failed: %w", err)
        }

        output, err := os.Open(outputFile)
        if err != nil {
            return err
        }
        defer output.Close()

        _, err = s3.New(sess).PutObject(&s3.PutObjectInput{
            Bucket: &bucket,
            Key:    aws.String(filepath.Base(outputFile)),
            Body:   output,
        })
        if err != nil {
            return err
        }
        log.Printf("Copied %s to %s", outputFile, bucket)
        return nil
    }

The handler downloads the file from S3 to the writable /tmp directory of the Lambda container and uses the os/exec package to run the ffmpeg command, which we will include in the Lambda image shortly.

The output file has the same name as the input file, but with a .webm extension. This file is then copied back to the S3 bucket using the PutObject call.
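One of the validations skipped here is worth sketching. Object keys in S3 event notifications arrive URL-encoded (a space shows up as `+`), and a key may contain `/` separators that do not exist as directories under /tmp. The helpers below are a hypothetical illustration using only the standard library; the names `localPath` and `webmName` are not part of the original handler.

```go
package main

import (
	"fmt"
	"net/url"
	"path"
	"strings"
)

// localPath URL-decodes an S3 event key and maps it to a flat path under /tmp.
func localPath(eventKey string) (string, error) {
	key, err := url.QueryUnescape(eventKey) // "+" becomes a space, %XX is decoded
	if err != nil {
		return "", err
	}
	if key == "" || strings.HasSuffix(key, "/") {
		return "", fmt.Errorf("key %q does not name a file", eventKey)
	}
	// S3 keys always use "/" as the separator, so package path is sufficient.
	return "/tmp/" + path.Base(key), nil
}

// webmName replaces the file extension, if there is one, with .webm.
func webmName(input string) string {
	return strings.TrimSuffix(input, path.Ext(input)) + ".webm"
}

func main() {
	in, err := localPath("uploads/my+holiday%281%29.mp4")
	if err != nil {
		panic(err)
	}
	fmt.Println(in)           // /tmp/my holiday(1).mp4
	fmt.Println(webmName(in)) // /tmp/my holiday(1).webm
}
```

The decoded key, not the encoded one, is also what a download request for the object would need.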

Let’s update our multi-stage Docker build to compile this Go handler and copy the build output along with the ffmpeg binary.

    FROM public.ecr.aws/bitnami/golang:1.15.5 as function-builder
    WORKDIR /build
    COPY . .
    RUN go build -o media-converter main.go

    FROM public.ecr.aws/lambda/go:latest
    COPY --from=ffmpeg-builder /usr/local/bin/ffmpeg/ffmpeg /usr/local/bin/ffmpeg/ffmpeg
    RUN ln -s /usr/local/bin/ffmpeg/ffmpeg /usr/bin/ffmpeg
    COPY --from=function-builder /build/media-converter /var/task/media-converter
    CMD [ "media-converter" ]

AWS provides a base image for Go-based Lambda functions at public.ecr.aws/lambda/go. We copy the ffmpeg binary to this image and create a symlink to it in the /usr/bin directory. The compiled handler binary, which we named media-converter, is copied to /var/task. Finally, CMD is set to the name of that binary, media-converter.

And that completes our lambda!

Let’s push this image to Amazon Elastic Container Registry (ECR).

Create a repository for this image if you don’t have one.

aws ecr create-repository --repository-name media-transcoder-lambda

Build the Docker image.

docker build -t media-transcoder-lambda .

Take note of the image ID of your build, then tag the image with the URI of the ECR repository that you created previously.

docker tag 3243d0d2dfa2 9xxxxxxxxxx6.dkr.ecr.us-east-1.amazonaws.com/media-transcoder-lambda

Log in to your ECR registry.

aws ecr get-login-password --region us-east-1 | docker login --username AWS --password-stdin 9xxxxxxxxxx6.dkr.ecr.us-east-1.amazonaws.com

And finally, push the image to your ECR.

docker push 9xxxxxxxxxx6.dkr.ecr.us-east-1.amazonaws.com/media-transcoder-lambda

Let’s create a Lambda function that makes use of this image.

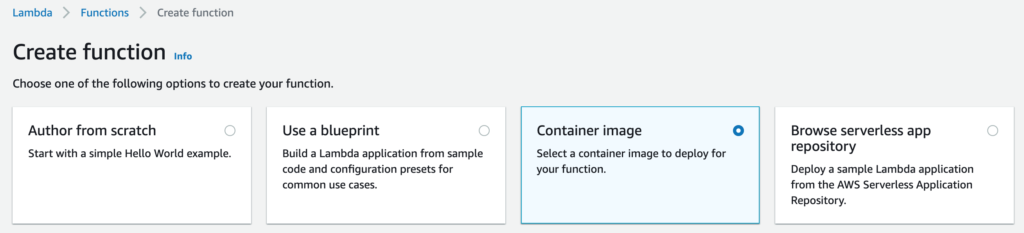

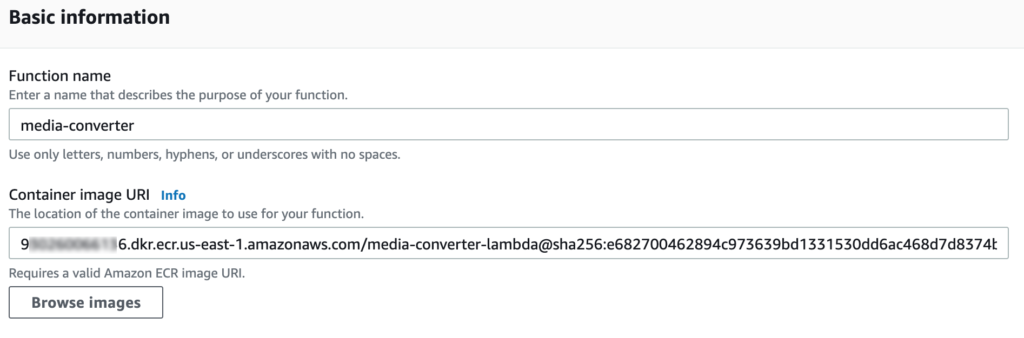

Click the Create function button, choose the Container image option, and select the URI of the image that you just pushed.

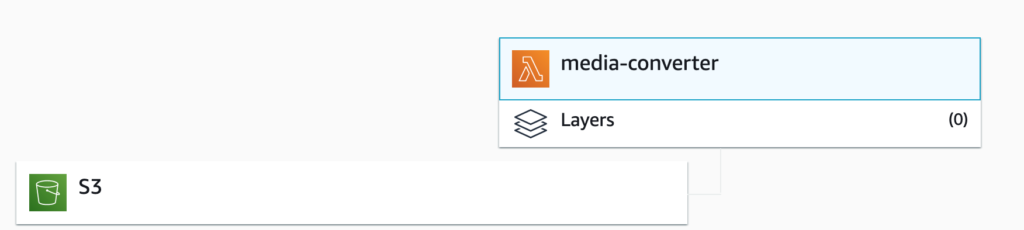

Create the function, and link it up to an S3 bucket trigger.

Make sure that you update the Lambda execution role with a policy to access your S3 bucket.

Increase the function's timeout from the 3-second default, since transcoding may take some time and the result still has to be uploaded back to S3. Lambda allows a maximum of 15 minutes.

You can configure the trigger so that the function is triggered only for PUT and only for specific file extensions like .mp4.
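The suffix filter matters for more than tidiness: the .webm output is written to the same bucket, so an unfiltered trigger would invoke the function again for its own output. As a second line of defence, the handler itself can check the key. The allow-list and the `shouldTranscode` name below are hypothetical.

```go
package main

import (
	"fmt"
	"path"
	"strings"
)

// inputExts is a hypothetical allow-list of source formats.
var inputExts = map[string]bool{".mp4": true, ".mov": true, ".mkv": true}

// shouldTranscode reports whether an object key looks like a source file.
// Keys ending in .webm are rejected because they are the function's own output.
func shouldTranscode(key string) bool {
	return inputExts[strings.ToLower(path.Ext(key))]
}

func main() {
	for _, key := range []string{"clip.mp4", "CLIP.MOV", "clip.webm", "notes.txt"} {
		fmt.Printf("%-10s transcode=%v\n", key, shouldTranscode(key))
	}
}
```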

Try uploading a file to your S3 bucket. If all goes well, a .webm equivalent will appear in the same bucket after a few minutes.

If not, check your CloudWatch logs.

Source Code

You can check the working source code here.